As 2017 might very well be the year for chatbots to go mainstream, now is a good time to discuss a way to analyse them. It turns out it’s easy to apply web-based customer journey analysis to a conversational interface. Let’s begin.

Limitations of standardised reporting

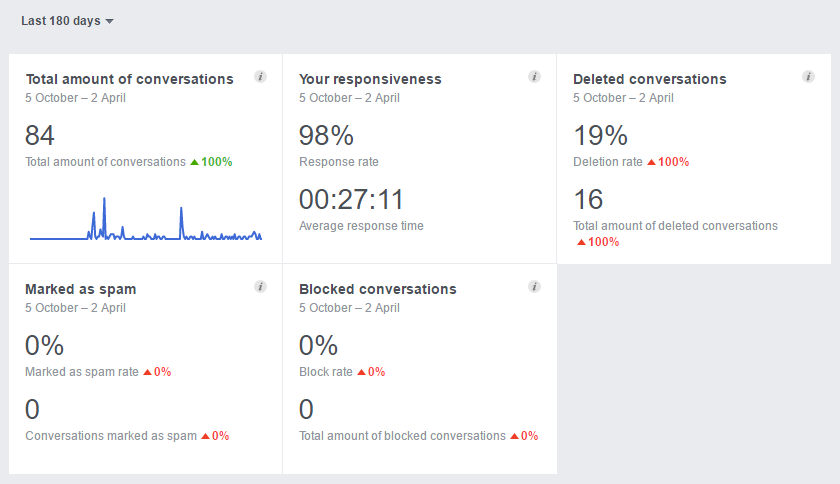

Currently, chatbot insights that platforms offer are limited. Let’s take Facebook’s chatbot reporting as an example. To analyse your messenger bot, you have access to five widgets in your Page Insights:

Facebook Messenger Bot reporting.

As you can see, you’ll get only high-level insights into conversations. And timewise, you’re limited to only the timeframes Facebook offers:

- Today

- Yesterday

- Last 7 days

- Last 28 days

- Last 180 days

That’s it. For any further analysis, you’ll have to manually dig into your chats. Add Facebook’s measurement mistakes, and we have two good reasons to take chatbot data matters into our own hands.

First, let’s look at what chatbots are used for.

The state of chatbots

Currently, chatbots often allow users to execute a single task through a conversational interface.

- Poncho: a weather forecast bot (try it, it’s fun!).

- Burger King bot (demo): order a burger (or other meal) through Messenger.

- IT support bot (demo): solve IT problems through Messenger.

In these examples, users will start a conversation to complete a task. And just as with good old websites, they’ll follow a journey through an interface.

How to analyse a conversational journey

As a starting point, it’s good to know that we can apply customer journey data collection to a conversational journey quite easily. On websites, we group a set of pages as micro conversions (e.g. product detail pages) and end goals (e.g. a thank you page). With conversations, all we need to do is replace a set of pages with a set of user inputs and we’re able to properly report on a bot’s performance.

User input categorisation

A chatbot session generally includes 3 steps:

- Stating the desired result: the user states a question or a task they want to complete.

- Input gathering: the chatbot asks the user for any missing input.

- Task completed: the task is completed.

These three steps allow us to categorise user input and help us define what data we need to measure chatbot performance. With them, we can calculate the completion rate for each input category: the share of chat sessions that reach that step. The rate for step 3 tells us how many of our chat sessions result in a completed task.
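As a minimal sketch of that calculation, assuming every user message has already been logged with a session id and one of the three step labels (the label names and the sample log below are made up for illustration):

```python
from collections import defaultdict

# Hypothetical labels for the three input categories described above.
STEPS = ["desired_result", "input_gathering", "task_completed"]


def completion_rates(events):
    """Return, per step, the share of all sessions that reached it.

    events: iterable of (session_id, step) tuples.
    """
    sessions = set()
    reached = defaultdict(set)
    for session_id, step in events:
        sessions.add(session_id)
        reached[step].add(session_id)
    total = len(sessions)
    if total == 0:
        return {step: 0.0 for step in STEPS}
    return {step: len(reached[step]) / total for step in STEPS}


# Made-up sample log: four sessions, two of which complete the task.
events = [
    ("s1", "desired_result"), ("s1", "input_gathering"), ("s1", "task_completed"),
    ("s2", "desired_result"), ("s2", "input_gathering"),
    ("s3", "desired_result"),
    ("s4", "desired_result"), ("s4", "input_gathering"), ("s4", "task_completed"),
]
rates = completion_rates(events)
print(rates)
```

Counting sessions rather than messages keeps a chatty user from inflating a step’s rate.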

Besides tracking whether your bot completes its task, we should add a secondary metric: effectiveness. We can capture a chatbot’s effectiveness with time: how long does it take to complete the desired task? So for each user input category that we’ve mentioned above, we’ll answer two questions:

- Do users reach step X?

- How long does it take them to reach step X?
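Both questions can be answered from the same message log once it carries timestamps. The sketch below assumes each event is a `(session_id, timestamp_in_seconds, step)` tuple and measures time from the first message in a session; the field layout, step labels and sample data are illustrative assumptions, not a platform export format.

```python
from statistics import median


def seconds_to_reach(events, step):
    """Map each session that reached `step` to the seconds it took.

    events: iterable of (session_id, timestamp_seconds, step) tuples.
    Time is measured from the first message seen in the session.
    """
    first_seen = {}
    reached_at = {}
    for session_id, ts, label in sorted(events, key=lambda e: e[1]):
        first_seen.setdefault(session_id, ts)
        if label == step:
            # Keep only the first time the step was reached.
            reached_at.setdefault(session_id, ts)
    return {sid: reached_at[sid] - first_seen[sid] for sid in reached_at}


# Made-up sample log with timestamps in seconds.
events = [
    ("s1", 0, "desired_result"), ("s1", 12, "input_gathering"), ("s1", 30, "task_completed"),
    ("s2", 100, "desired_result"), ("s2", 150, "input_gathering"),
    ("s3", 200, "desired_result"), ("s3", 210, "input_gathering"), ("s3", 250, "task_completed"),
]

durations = seconds_to_reach(events, "task_completed")
all_sessions = {session_id for session_id, _, _ in events}

reach_rate = len(durations) / len(all_sessions)   # do users reach step X?
median_seconds = median(durations.values())       # how long does it take them?
print(reach_rate, median_seconds)
```

The median is used here instead of the mean so that one user who walks away mid-chat and returns an hour later does not dominate the figure.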

Let’s look at the three example bots and categorise user input:

Poncho

- Stating the desired result: User wants to know the weather forecast.

- Input gathering: User fills out location details.

- Task completed: User gets the forecast.

Burger King

- Stating the desired result: User wants to order a meal.

- Input gathering: User fills out desired meal information.

- Task completed: User has ordered a meal.

IT support

- Stating the desired result: User reports a problem.

- Input gathering: User shares problem details.

- Task completed: User has solved the problem.

Depending on the complexity of the task, you could set up a more detailed breakdown of step 2. In the Burger King example, you might have separate steps for burger selection, side dishes and drinks.

Considerations

Personally, I’ve created a chatbot that has had about 90 sessions. Though the numbers are low, an analysis of these sessions taught me three things:

1. People say hi to your bot

Earlier, we discussed that most interactions fall into one of three categories. This would mean that a super effective conversation with your bot includes only 3 steps. But in a chat interface, people will act as they would with real people: they will greet your bot. You might consider limiting the effectiveness measurement to the time between step 1 (stating the desired result) and step 3 (task completion). You might also consider capturing a greeting as a step 0.
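A rough sketch of both ideas, assuming messages have already been numbered with steps 0 to 3 and timestamped in seconds. The greeting word list and the sample session are hypothetical.

```python
# Hypothetical greeting words; extend for your own audience and language.
GREETINGS = {"hi", "hello", "hey", "yo"}


def is_greeting(text):
    """Return True when a message consists of greeting words only (step 0)."""
    words = [w.strip("!.,?") for w in text.lower().split()]
    return bool(words) and all(w in GREETINGS for w in words)


def task_seconds(messages):
    """Seconds between the first step 1 and the first step 3 of one session.

    messages: list of (timestamp_seconds, step_number) tuples.
    Returns None when the session never stated a goal or never completed.
    """
    step1 = [ts for ts, step in messages if step == 1]
    step3 = [ts for ts, step in messages if step == 3]
    if not step1 or not step3:
        return None
    return min(step3) - min(step1)


# Made-up session: greeting at 0s, goal at 8s, details at 15s, done at 31s.
session = [(0, 0), (8, 1), (15, 2), (31, 3)]
print(task_seconds(session))
```

Measured this way, the eight seconds spent on the greeting do not count against the bot.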

2. People will test your bot limits

Second, people get creative with their input. They will test your bot’s flexibility. So besides the standard steps 0 to 3, categorising all other data as ‘other input’ will tell you if this type of reaction is triggered a lot. If so, you can drill down on this category to see if you should add new categorisations for user input.
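To illustrate the fallback category, here is a deliberately naive keyword categoriser for a weather bot. The rules and messages are invented for the example; a real bot would more likely reuse the intents from its own language-understanding layer.

```python
import re
from collections import Counter

# Hypothetical keyword rules, checked in order; the first match wins.
RULES = [
    ("greeting", {"hi", "hello", "hey"}),
    ("desired_result", {"weather", "forecast"}),
    ("input_gathering", {"amsterdam", "london", "today", "tomorrow"}),
]


def categorise(text):
    """Return the first matching category, or 'other_input' as the fallback."""
    words = set(re.findall(r"[a-z']+", text.lower()))
    for category, keywords in RULES:
        if words & keywords:
            return category
    return "other_input"


def other_input_share(messages):
    """Share of messages that did not match any known category."""
    counts = Counter(categorise(m) for m in messages)
    total = sum(counts.values())
    return counts["other_input"] / total if total else 0.0


# Made-up messages, two of which test the bot's limits.
messages = ["Hi!", "What's the weather?", "Amsterdam", "Are you a robot?", "Tell me a joke"]
share = other_input_share(messages)
print(share)
```

If that share stays high, listing the raw ‘other input’ messages is a cheap way to spot candidates for new categories.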

3. The end is not the end

Lastly, keep in mind that the end is not the end. When your bot answers the user’s initial question, the user doesn’t always confirm. The user may just leave the chat and use the information without ever telling you (there’s no good old trusty thank you page). The second to last step may very well mean the end of the conversational journey.

One way to take this into account is by analysing how many steps it takes a user to complete a task. If the average is five, a conversation with four steps without the confirmation might be considered completed as well. Another option is asking the user for confirmation: "Did this chat help you?"
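The step-count heuristic could look something like this. It assumes you know, per session, how many steps were taken and whether the user explicitly confirmed; the `tolerance` of one missing step and the sample data are assumptions to tune against your own chats.

```python
from statistics import mean


def infer_completed(sessions, tolerance=1):
    """Flag sessions as completed, explicitly or by step count.

    sessions: dict of session_id -> (step_count, confirmed).
    A session without confirmation counts as completed when its step count
    is at most `tolerance` below the average of the confirmed sessions.
    """
    confirmed_counts = [n for n, confirmed in sessions.values() if confirmed]
    if not confirmed_counts:
        # No baseline to compare against, so nothing can be inferred.
        return {sid: False for sid in sessions}
    threshold = mean(confirmed_counts) - tolerance
    return {
        sid: confirmed or n >= threshold
        for sid, (n, confirmed) in sessions.items()
    }


# Made-up sessions: confirmed ones average five steps.
sessions = {
    "s1": (5, True),
    "s2": (4, False),   # one step short: probably left after getting the answer
    "s3": (2, False),   # dropped out early
    "s4": (5, True),
}
completed = infer_completed(sessions)
print(completed)
```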

The future

Last Saturday, during the unconference day Measurecamp in Amsterdam (which is awesome), we discussed the impact of the conversational interface on data. What will the future of conversational measurement look like? A few things came up when talking to others in the field:

- Data mining: as we move from graphical interfaces to conversational interfaces, the value of mining texts will increase. There will also be more text to mine.

- Contexts: assistants can already determine the context of a user’s input (so you don’t always have to do it manually).

- Brands: chatbots are in a weird spot right now. They are currently used as an extension of your brand, they may become the only way users communicate with your brand, and may even become just a single data point when an AI assistant (from Google, Facebook or Amazon) requests data from your brand. How do you analyse that single data point? What will a data analyst’s job look like?

- Emotions: emoticons add emotion to text. When we move from graphics to text to speech, emotions will go straight from you into the computer. No way to hide your feelings (without good acting skills, that is).

Besides this, I also noticed that chatbots are fun. Speaking from both other people’s experience and my own: there is great fun to be had when you start seeing real people interacting with your chatbot.

Next steps

For now, we know how to analyse a conversational journey and we can start measuring a chatbot’s performance. And if we know how well it performs, the fun stuff begins: optimising your chatbot with different types of responses. My colleague David has written a great post that explores conversational optimisation.
