Online ads are becoming increasingly personalized. To a large degree, this is made possible by the huge amounts of data gathered online: using this data, smart algorithms can tailor ads to a specific person’s interests and needs. What if we could personalize ads to the same degree in the offline world? The data is available, so what is holding us back?

Facial recognition

Our social robot experiment aims to establish a solid connection between the online and the offline world. The robot offers an interface for using online data in an offline setting. In addition, it can generate data of its own by measuring and analyzing offline behavior.

The first step in linking data to a particular person is simply recognizing who that person is. We chose facial recognition as our identification technique. Facial recognition systems are computer applications that can identify a person from a digital image. This technology offers a non-intrusive way of identifying someone without requiring any input other than their presence.

How does it work?

Computers distinguish between human faces by running several algorithms in sequence. The first step is to detect a face within the frame, which is done using Haar feature-based cascade classifiers.
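To make "Haar feature-based" a bit more concrete, here is a minimal pure-Python sketch of the building block such cascades rely on: an integral image (summed-area table) and a single two-rectangle Haar-like feature. It is an illustration only, not the OMRON module's or OpenCV's implementation, and the function names are hypothetical. A real detector evaluates thousands of such features, selected by boosting and arranged in a cascade, over many window positions and scales.

```python
def integral_image(img):
    """Summed-area table: ii[y][x] is the sum of img over rows < y and columns < x."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, x, y, w, h):
    """Sum of the w-by-h rectangle with top-left corner (x, y), in constant time."""
    return ii[y + h][x + w] - ii[y][x + w] - ii[y + h][x] + ii[y][x]


def two_rect_feature(ii, x, y, w, h):
    """Two-rectangle (edge) Haar-like feature: lower half minus upper half.

    A large positive value means the upper band is darker than the lower band,
    the kind of contrast found between the eyes and the cheeks. h must be even.
    """
    half = h // 2
    upper = rect_sum(ii, x, y, w, half)
    lower = rect_sum(ii, x, y + half, w, half)
    return lower - upper


# Tiny 4x2 grayscale patch: dark upper half, bright lower half.
patch = [[10, 10], [10, 10], [200, 200], [200, 200]]
ii = integral_image(patch)
print(two_rect_feature(ii, 0, 0, 2, 4))  # 760
```

In practice you would not write this yourself: OpenCV ships pretrained cascades that can be loaded with `cv2.CascadeClassifier` and applied to a grayscale frame with `detectMultiScale`.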

After a face has been detected, another algorithm extracts its facial features and converts them into a set of values. This set is then compared against the existing dataset of scanned faces, and the face is identified when a positive match is found. Knowing these basics, it is fairly easy to set up a similar system yourself. And so we did!
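The matching step can be sketched as a nearest-neighbour search. This sketch assumes each face has already been turned into a fixed-length feature vector; the vectors, the `identify` function and the `max_distance` threshold are hypothetical, and the OMRON module may well compare faces differently. Storing several vectors per person means a match against any of them counts for that person.

```python
import math


def identify(probe, gallery, max_distance):
    """Return (name, distance) for the closest enrolled face vector.

    gallery maps a person's name to a list of feature vectors (one per photo).
    If even the closest vector is farther than max_distance, the name is None,
    i.e. there is no positive match.
    """
    best_name, best_dist = None, math.inf
    for name, vectors in gallery.items():
        for vec in vectors:
            d = math.dist(probe, vec)  # Euclidean distance (Python 3.8+)
            if d < best_dist:
                best_name, best_dist = name, d
    if best_dist > max_distance:
        return None, best_dist
    return best_name, best_dist


# Hypothetical 3-dimensional feature vectors; real ones are much longer.
gallery = {
    "alice": [[0.10, 0.20, 0.30], [0.12, 0.18, 0.33]],
    "bob": [[0.90, 0.80, 0.70]],
}
print(identify([0.11, 0.21, 0.29], gallery, max_distance=0.5)[0])  # alice
print(identify([5.0, 5.0, 5.0], gallery, max_distance=0.5)[0])  # None
```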

Getting started

Robin (the robot) comes equipped with a facial recognition module provided by OMRON. This module contains the algorithms described above and allows you to learn and recognize faces. Similar software is available in many programming languages, and most of it builds on the OpenCV library.

Once you have chosen your software, the next step is to build a dataset. Detected faces are compared against the faces the robot has learned before, so a reliable dataset is the foundation of your facial recognition system.

When taking the pictures, rule out any factors that may confuse the software or contaminate the dataset:

- Use a uniform (preferably white) background

- Make sure all facial features (e.g. eyes, nose and mouth) are visible

- Have the person face the camera straight on

- Reproduce the lighting conditions of the location where the system will be used

- If people always wear glasses and/or hats, keep them on
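The lighting guideline can be partly automated. The sketch below compares the brightness (mean) and contrast (standard deviation) of an enrollment photo with a reference shot taken at the deployment site. The function name and both thresholds are hypothetical values chosen for illustration, not tuned settings, and images are represented as plain 2D lists of grayscale values from 0 to 255.

```python
from statistics import mean, pstdev


def lighting_matches(photo, reference, max_mean_diff=25.0, max_contrast_ratio=1.5):
    """Rough check that a photo was lit like the place the robot will operate.

    photo and reference are 2D lists of grayscale values (0 to 255).
    The thresholds are illustrative assumptions, not tuned values.
    """
    p = [v for row in photo for v in row]
    r = [v for row in reference for v in row]
    # Overall brightness must be similar.
    if abs(mean(p) - mean(r)) > max_mean_diff:
        return False
    # Contrast (spread of pixel values) must be similar too.
    sp, sr = pstdev(p), pstdev(r)
    if sp == 0 or sr == 0:
        return sp == sr  # True only if both images are perfectly flat
    return max(sp, sr) / min(sp, sr) <= max_contrast_ratio


reference = [[100, 120], [110, 130]]
print(lighting_matches([[105, 125], [115, 135]], reference))  # True
print(lighting_matches([[10, 20], [15, 25]], reference))  # False (far too dark)
```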

After you have established your dataset, you can start recognizing faces.

## Improving results

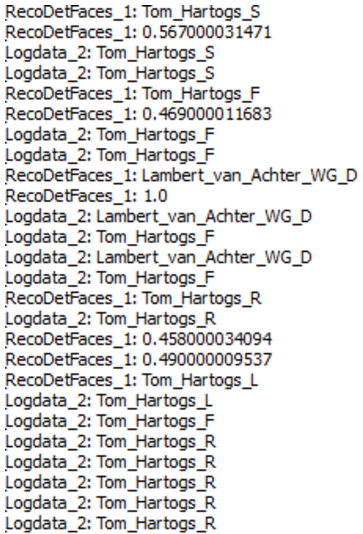

The OMRON module only returns the person’s name when a positive match is found. If you dig a bit deeper, however, you can extract a certainty value for each match. If you are getting a lot of false matches, you can raise the minimum certainty a match needs before it is accepted. At this point you can recognize faces, but you will notice that the results are not yet very robust.
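Raising the threshold amounts to discarding every match whose certainty falls below a minimum. The sketch assumes matches arrive as hypothetical `(name, certainty)` pairs with the certainty expressed as a percentage; the OMRON module's actual output format is not shown here.

```python
def confident_matches(matches, min_certainty):
    """Keep only the (name, certainty) pairs whose certainty reaches min_certainty.

    Raising min_certainty trades missed recognitions for fewer false matches.
    """
    return [(name, c) for name, c in matches if c >= min_certainty]


hits = [("alice", 82.0), ("bob", 41.5), ("carol", 60.0)]
print(confident_matches(hits, 60))  # [('alice', 82.0), ('carol', 60.0)]
```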

### Angled view

This facial recognition technique does NOT create a 3D representation of a person’s head. As a result, whenever a person faces any direction other than straight into the lens, the software has a hard time recognizing them.

To counter this problem, we took six different photos of each person. For the first five photos, the person was asked to face a different direction each time (up, down, left, right, straight). For the last photo, the person was asked to smile. The robot can now match a detected face against any of these photos and link it to the correct person. As a result, the perspective from which the robot views a person, and the expression on their face, no longer matter nearly as much.
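Enrollment with multiple poses boils down to storing several reference samples under the same name, so that a match against any of them identifies the person. A minimal sketch, assuming each photo has already been converted into a feature vector; the pose labels follow the six photos described above, while `enroll` and the vector format are hypothetical.

```python
REQUIRED_POSES = ("straight", "up", "down", "left", "right", "smile")


def enroll(gallery, name, shots):
    """Add one person's six reference vectors to the gallery.

    shots maps a pose label to the feature vector extracted from that photo.
    Raises ValueError if any of the required poses is missing.
    """
    missing = [pose for pose in REQUIRED_POSES if pose not in shots]
    if missing:
        raise ValueError(f"{name}: missing poses {missing}")
    gallery.setdefault(name, []).extend(shots[pose] for pose in REQUIRED_POSES)
    return gallery


gallery = {}
shots = {pose: [float(i)] for i, pose in enumerate(REQUIRED_POSES)}
enroll(gallery, "alice", shots)
print(len(gallery["alice"]))  # 6
```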

False hits

Unfortunately, we were still receiving false hits. This happened mostly when a person was in motion.

Based on this, we decided to aggregate several hits instead of trusting a single one. Whenever a face is detected, it receives its own unique identifier. Every time a hit for this face fires, its certainty value is added to the score of the corresponding name label. After ten hits, the name label with the highest score triggers the output. Because the scores are built from certainty values, this works as a certainty-weighted vote: hits with higher certainty have a stronger impact than those with lower certainty.
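The certainty-weighted vote can be sketched as follows. It assumes the detector supplies a stable `face_id` per tracked face and a certainty per hit; `FaceVoter` is a hypothetical name, and clearing a face's tally once it has produced an output is our assumption, since the text does not say what happens after the tenth hit.

```python
from collections import defaultdict


class FaceVoter:
    """Accumulate certainty-weighted votes per tracked face."""

    def __init__(self, hits_needed=10):
        self.hits_needed = hits_needed
        # face_id -> {name: summed certainty}
        self.scores = defaultdict(lambda: defaultdict(float))
        self.counts = defaultdict(int)

    def add_hit(self, face_id, name, certainty):
        """Record one hit. Returns the winning name once hits_needed hits
        have been collected for this face, otherwise None."""
        self.scores[face_id][name] += certainty
        self.counts[face_id] += 1
        if self.counts[face_id] < self.hits_needed:
            return None
        tally = self.scores.pop(face_id)  # assumption: start a fresh tally afterwards
        del self.counts[face_id]
        return max(tally, key=tally.get)


voter = FaceVoter(hits_needed=3)
voter.add_hit(1, "alice", 0.9)
voter.add_hit(1, "bob", 0.95)
print(voter.add_hit(1, "alice", 0.6))  # alice (1.5 beats 0.95)
```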

Nevertheless, some wrong matches kept appearing. These matches either scored 100% or had exactly the same score several times in a row. Fortunately, this made them easy to counter: we ignored scores that looked too perfect and only saved a score if it differed from the previous one.
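Both counter-measures fit in a small filter that sits in front of the vote. A minimal sketch, assuming certainties are percentages and that "the previous score" means the previous score for the same tracked face; `ScoreFilter` and the per-face bookkeeping are hypothetical.

```python
class ScoreFilter:
    """Drop suspicious scores before they reach the vote."""

    def __init__(self, perfect=100.0):
        self.perfect = perfect
        self.last = {}  # face_id -> most recent raw score

    def accept(self, face_id, score):
        previous = self.last.get(face_id)
        self.last[face_id] = score
        if score >= self.perfect:  # too perfect to be trusted
            return False
        if score == previous:  # identical to the previous score: likely a stuck match
            return False
        return True


f = ScoreFilter()
print([f.accept(1, s) for s in (100.0, 87.5, 87.5, 90.0)])  # [False, True, False, True]
```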

While studying the pictures that led to the false hits, we spotted recurring characteristics. The main problems were reflective areas, such as glasses and bald heads, as shown below.

Other recurring problems were facial landmarks (e.g. an eye) that were poorly or only partly visible. Since the dataset contains six pictures of each individual, we decided to simply remove the pictures containing any of these flaws.
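Pruning the dataset this way is a simple filter, but it is worth checking that nobody ends up with no pictures at all. A minimal sketch; the flag names (such as `"glare"` or `"occluded_eye"`) are hypothetical labels you would assign while reviewing the photos, and `prune_dataset` is an illustrative name.

```python
def prune_dataset(photos):
    """Remove flagged photos from the dataset.

    photos maps a person's name to a list of (photo_id, flags) pairs, where
    flags is a set of problems found during review (empty set = clean photo).
    Returns (kept, emptied): the clean photo ids per person, and the names of
    people left without any usable photo, who would need new photos.
    """
    kept, emptied = {}, []
    for name, shots in photos.items():
        clean = [photo_id for photo_id, flags in shots if not flags]
        if clean:
            kept[name] = clean
        else:
            emptied.append(name)
    return kept, emptied


photos = {
    "alice": [("alice_straight", set()), ("alice_left", {"glare"})],
    "bob": [("bob_straight", {"occluded_eye"})],
}
print(prune_dataset(photos))  # ({'alice': ['alice_straight']}, ['bob'])
```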

Final result

After adding these improvements, the software outputs stable and confident results. We are now able to identify people successfully with facial recognition! The next step is performing personal actions after identification, which we will cover in Part 2 of the Personalized offline ads series.

In the meantime, you can follow Robin on Instagram to watch his progress and stay up to date on his journey and his efforts to connect the offline and the online world!
