In the new age of AR, the way we connect with consumers is changing. We're looking through our screens instead of looking at them. Physical interactions and custom experiences are great ways to tell stories and immerse people in new surroundings. At Facebook's F8 developer conference, Mark Zuckerberg announced a lot of exciting AR features for creatives and developers to experiment with. Before diving into the F8 announcements, I want to describe the journey we at We Are Blossom have taken up to this point.

In the beginning

Since September 2017, we have been able to develop AR filters for the Facebook camera. At the time, Facebook's AR Studio was in closed beta, but we were fortunate enough to be included. During this period we developed prototypes for the musical The Lion King, for Sportlife and for internal marketing, to see how the tool would hold up. It was clearly still experimental. Many features, such as normal maps, plane tracking, joint animation and visual coding, were not yet available and were added to the pipeline gradually. Facebook's dedicated AR team ships new features every month, and after a few months the tool was released to the public. It can now be used commercially and already covers most of the features you would expect.

First case: KPN

After this exploration phase, we approached KPN and proposed a Facebook filter to promote their ongoing campaign around ice skating at the Winter Olympics. Users could experience what it feels like to be an ice skater, wearing the official outfit while a Dutch flag is hoisted behind them as if they had just won a medal. Physical interactions are key to an experience like this, and it is fun to watch how people respond: they start dancing, they start smiling and, just as importantly, they start exploring. Because the user triggers the interactions, they feel in control of how the experience unfolds.

Technical problem solving

The tool caps an effect's download size at 2 MB, which means a lot of downscaling of textures and models. You have to think carefully about how to preserve the brand's quality while keeping the effect light enough to run on most phones. In the KPN filter the user puts on a skating suit, which should deform realistically, but the tool only tracks the face. By placing joints inside the suit to control its deformation and orienting them correctly, we were able to compensate for head movement so that the neck and shoulders move more realistically. In the example below, you can see the suit in action. Or try it yourself: KPN AR filter
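Staying under the 2 MB cap is easier if you check the asset budget before importing anything. The Python sketch below is not part of AR Studio; it is a hypothetical helper that totals file sizes and ranks the largest assets first, since those are the first candidates for downscaling. It assumes "2MB" means 2 × 1024 × 1024 bytes and that on-disk file size is a reasonable proxy for what the tool actually counts; neither assumption is confirmed here, and the function and file names are made up for illustration.

```python
from pathlib import Path

# Assumption: "2MB" is read as 2 * 1024 * 1024 bytes. Whether the tool measures
# compressed or uncompressed size is not stated, so treat this as a placeholder.
MAX_EFFECT_BYTES = 2 * 1024 * 1024


def sizes_in_folder(folder: str) -> dict[str, int]:
    """Collect the on-disk size of every file under a (hypothetical) asset folder."""
    root = Path(folder)
    return {
        str(p.relative_to(root)): p.stat().st_size
        for p in root.rglob("*")
        if p.is_file()
    }


def check_budget(sizes: dict[str, int], limit: int = MAX_EFFECT_BYTES):
    """Return (fits, total, over_by, largest_first) for a mapping of asset name -> bytes."""
    total = sum(sizes.values())
    # How many bytes must be cut to get under the limit (0 if it already fits).
    over_by = max(0, total - limit)
    # Biggest assets first: these give the most savings when downscaled.
    largest_first = sorted(sizes.items(), key=lambda kv: kv[1], reverse=True)
    return total <= limit, total, over_by, largest_first
```

For example, a 1,200,000-byte diffuse texture, a 900,000-byte normal map and a 250,000-byte mesh add up to 2,350,000 bytes, which exceeds the assumed limit by 252,848 bytes, so the diffuse texture would be the first thing to downscale.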

What’s next?

What can we expect from Facebook in the coming months? They did not give specific dates for when these features will roll out, but here is what to expect.

Expansion of platforms

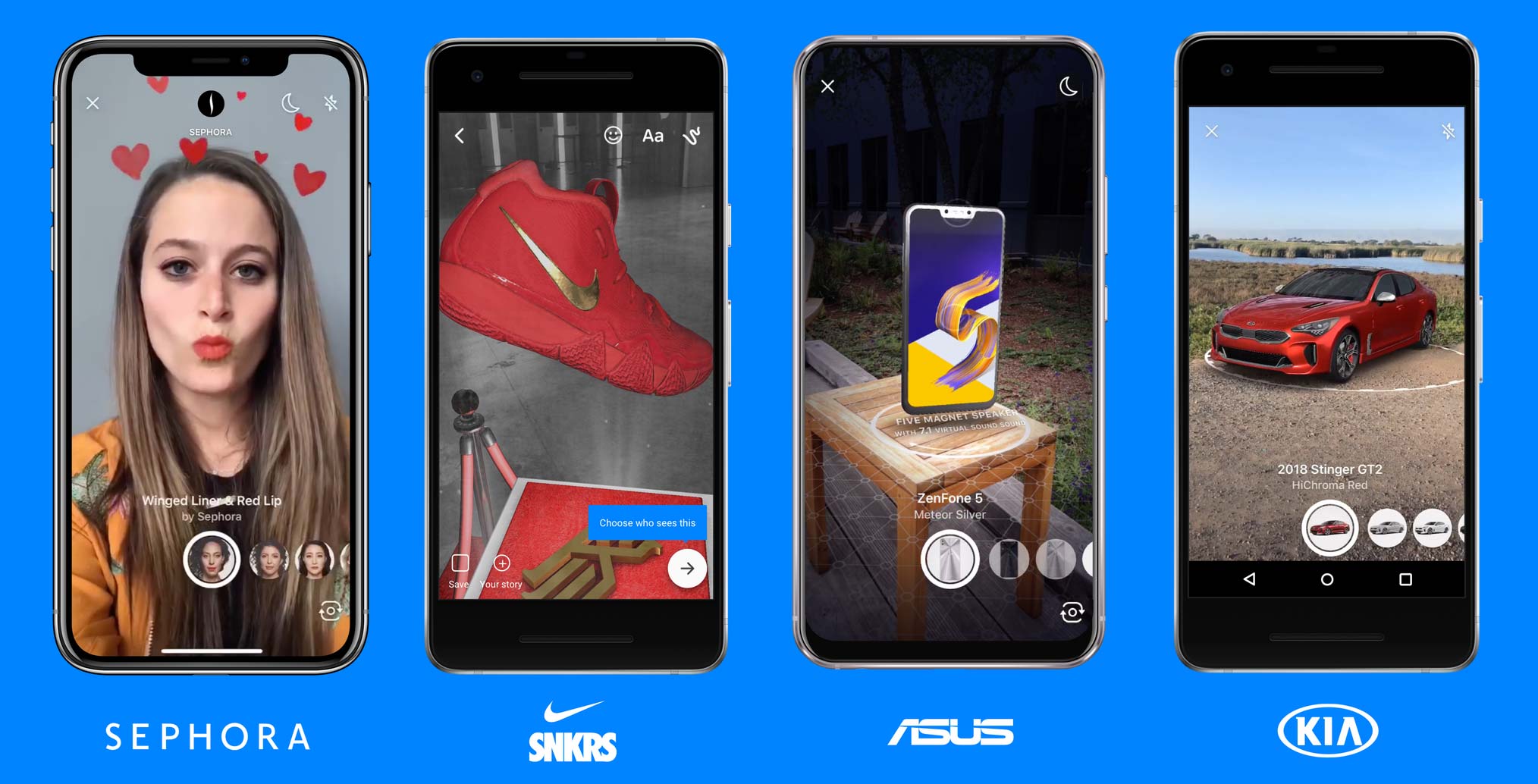

Facebook announced that its AR platform will expand to Messenger, Instagram and Facebook Lite. Messenger and Instagram are used quite differently from Facebook.

AR for Instagram:

Like Facebook, Instagram is driven by the shareability of posts, but in a different way. Facebook is largely information-driven, with text playing a key role, while Instagram is image-based and about capturing moments. We expect to see a rise in both more advanced AR content and small, simple effects once Messenger and Instagram adopt Facebook's AR Studio.

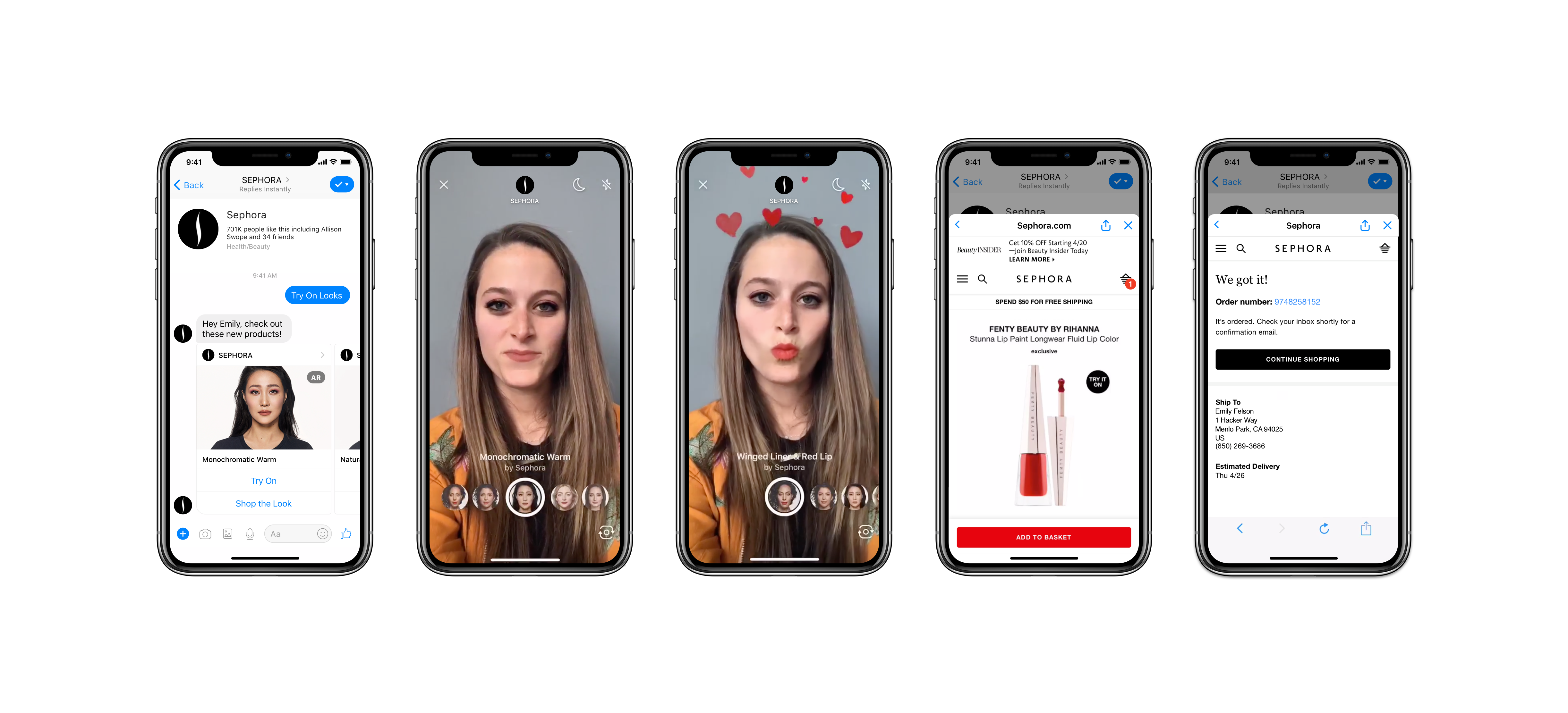

AR for Facebook Messenger:

Messenger uses information as its key driver of engagement, so an AR filter on Messenger should fit that format. On Facebook it is all about the shareability of a filter, while on Messenger it is more about providing information, working together with a chatbot and selling a product directly. Expect many product visualisations that let you view and buy a product in AR. AR filter integration in Messenger is currently in beta.

Keep it simple

Because AR Studio is released on such a broad platform, Facebook aims to make the tool as easy to use as possible. In early 2018 they introduced visual coding (programming without knowledge of JavaScript, C#, Python, etc.) through the Patch Editor. At first it was very limited, but it has since developed to the point where creatives can build complex interaction designs without writing any code. With that in mind, it makes sense that they would add 3D import from Sketchfab, which removes the opposite barrier: as a developer you don't need 3D modelling skills to create an experience, because you can download the model you want directly in AR Studio.

Hand- and body tracking

Facebook also plans to add more advanced systems to its pipeline: more sophisticated face tracking, plus hand tracking and body tracking, for more immersive and complete experiences. Plane tracking is not very accurate at the moment, and they plan to improve it. Snapchat already uses ARCore and ARKit implementations for significantly better tracking.

Analytics(!)

Analytics is also going to change a lot for AR experiences: clients and companies will be able to track how their AR filter performs and gain insights into how to improve their interaction with consumers and create better selling experiences. There is no release date for this feature yet, but it is a key item for companies looking to drive engagement.

For now, we wait and keep exploring where this journey will take us. I can only imagine the possibilities that will open up over this year and the next.